Wednesday, April 30, 2008

Showing the Extra Stuff

I recently came across a neat-looking equivalent of that sort of thing while catching up on various world events on BBC News' site last week. BBC News' articles increasingly have sidebars, popup windows, small videos, etc., which add extra substance or detail or visual examples to complement the text of their articles. While reading one article about the chaos in Zimbabwe and the international reaction to same, I found the infobox midway through the article to be a really good example of this sort of thing. Using nothing but very basic HTML (check the page source!), the article manages to briefly describe and illustrate the stances of ten African countries near Zimbabwe not only clearly, but in a way that only takes up a few square inches of screen real estate. Those who don't want to follow up can just keep scrolling through the article, and those who do can get a decent amount of information (by news-article standards) easily, compactly, and with minimal requirements for outside software (like the generally-overdone use of Flash for just about everything).

I can't argue much with the timing of coming across that kind of example (or at least coming across it while equipped to notice it), in any case. Having recently finished one educational project as part of the public history program, setting off on another as part of a summer internship, and tentatively putting together material for a third major one in the fall, I've had the presentation of supplemental information on my mind for a while. I generally think it's better to present more information than less; too much rather than too little. At the same time, I know full well that if I'm erring on the side of excess, doing so in a manner that isn't overwhelming is kind of important. The BBC infobox does that job quite well, and it's got me thinking of ways to adapt it to various purposes in my current and future projects.

Monday, April 21, 2008

Eye candy and the creation thereof

To that end, I'm trying to dig up some resources on visualization in interactive projects - stuff as much on a theoretical/technical level as anything else, sort of like the interaction design textbook we were using in the digital history course but more focused. A friend of mine out west, who has worked at BioWare for a while - and who is thus completely surrounded by interactive visualization of the highest order - less recommended than demanded that I check out Colin Ware's Information Visualization: Perception for Design as a starting point. I plan to pillage it from Western's library tomorrow.

Newbie to the field on any kind of detailed level as I am, I'm curious as to what else could be out there. Is anyone reading this who's familiar with the field aware of any other resources I might want to try checking out?

Saturday, April 19, 2008

Here, have a neat gadget

A stroll around the ancient city of Pompeii will be made possible this week thanks to an omni-directional treadmill developed by European researchers.

The treadmill is a "motion platform" which gives the impression of "natural walking" in any direction.

The platform, called CyberCarpet, is made up of several belts which form an endless plane along two axes.

Scientists have combined the platform with a tracking system and virtual reality software recreating Pompeii.

The bulk of what I have to say about this one is "Cool!" coupled with a big list of other places I'd like to see something like this used with, so I think I'll leave it at that for now.

Wednesday, April 16, 2008

Information Wants To Be Anthropomorphized

In my last post I spent awhile talking about the growth of amateur culture from a fairly niche sort of thing to a broader - and far more accessible - phenomenon. Considering the extent to which we've been throwing terms like "MySpace Generation" or "Youtube Generation" around in the last several years I think it's pretty safe to suggest that the Net might have had something to do with this. It makes sense to suggest that the Net is going to shape the interpretation and presentation of even traditionally-academic subjects: it's utterly ubiquitous in the developed world these days, and large swathes of its original incarnation developed out of academia in the first place anyway.

While it's weird to think so these days, with the Internet's recent proliferation of just about any kind of frivolity, inaccuracy, or Thing Man Was Not Meant To See, the Internet was once, at its heart, a fundamentally academic institution. Its culture was largely that of the American computer-science student community, with rhythms organized around the academic year. Many still use the term "September that Never Ended" to describe all time since September 1993 - referring to the annual influx of newbies with little awareness of proper netiquette which became an unending flood that fall - and speak with almost a religious hope of the prophesied October 1, 1994, which has yet to arrive. "What's your major?" was a question equivalent to "what's your sign?" and so on.

While it's somewhat obvious that the bulk of online culture is no longer centered around those kinds of patterns, a core of them is still around and at least influences a large portion of the Net. Large swathes of the parts of the Net with a useful signal-to-noise ratio have much in common with the Net's original large-scale discussion system, Usenet, before its collapse in the mid-1990s. Usenet, and its current successors[1], had a combination of an anything-goes approach subject-wise and a lack of any barriers to participation beyond simple access to the network in the first place. The results varied from the completely silly (such as most of the thousands of groups in the "alternate" hierarchy[2]) to general-interest groups (such as rec.pets.cats), to artistic communities (such as alt.fiction.original, which a couple of friends and I created in the mid-90s as an escape from the ubiquity of horrific fanfiction) to more academic topics (such as sci.archaeology).

Unless someone was already known by name otherwise - a known scholar in a given field, or one of the various alpha geeks of the Internet at the time[3] - there were no clear credentials or pecking orders to go by other than reputations as established in a given forum. It resulted in an odd sort of situation where someone using a real name and some postnominals and someone else with an elaborate and obvious pseudonym would generally end up on an equal footing in discussion from the get-go and diverge based on shown competence alone. (As an example, someone I knew some years back, who wound up hugely respected in the spam-fighting field, was - and to my knowledge, still is - known only as "Windigo the Feral.") While a lot of people were, and still are, dismayed at the potential for this kind of anonymity, many others found (and find) it liberating.

This kind of digital culture - gleefully eccentric, technically minded, generally libertarian and explicitly meritocratic - and its descendants have had a tremendous influence on a lot of digital-humanities concepts, between their broader impacts on digital culture in general and the fact that a lot of people working in the digital humanities tend to have strong computing backgrounds. This kind of community is the source of the whole open source movement, which is in turn the source of any number of tools I've mentioned on this blog. Zotero, Blender, Celestia, and Freemind are just four examples that immediately come to mind, and each is a program which came out of this movement and which I've managed to turn to one historical purpose or another at least once this year. Each of those has a commercial counterpart, but the open-source versions have the little advantage of being free. When compared to Endnote ($300), Lightwave ($1100 - thousands of dollars cheaper than when I last checked!), Voyager 4 ($200) or Mind Manager Pro ($350), I know full well where my preferences are going to lie - particularly since both sets of software are often similar or identical in terms of capabilities, even when correcting for open-source software's tendency towards lousy interfaces.
There are moves to unify these sorts of things towards educational purposes, even in the realm of physical computers themselves. And to make it even better, should I feel moved to and have the capabilities, I can, as Dr. Turkel put it, feel free to fix anything that is bugging me.

This sort of attitude - that I (and you!) should have a say in what's going on if we're able to do so in a competent manner - is, I find, a healthy one to have in the first place, and fairly central to a lot of the ideas of what academia was meant to be to begin with. I don't like gatekeepers who don't have a really good reason to be such, like the trauma surgeon I mentioned in my classically unfair example in my previous post. When you combine it with the capability for easy exchange of information modern technology tends to give us, it's only a short trip to a whole crop of notions like folksonomies, the pursuit of databases of intentions, turning filtering techniques meant to catch spam into more sophisticated information-extraction methods, and any number of other things. Between the basic potential of these sorts of techniques and the willingness of so many pursuing them to share their research and tools all over the place (notice on that netiquette link above that one of the items was "share your expertise?"), I think it's a pretty exciting time to be an historian. Dealing with the mass of information currently sitting around due to electronic archiving's one thing; turning a lot of these tools on the stuff we've traditionally been condemned to looking through via microfiche readers is another entirely.

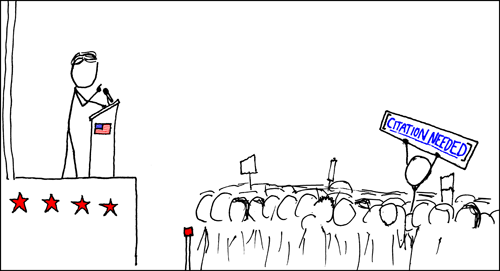

There are potential problems because of the Net and its cultures as a new base for scholarship as well, of course. Pretty much the standard debate to do with the Internet's implications for scholarship is the reliability/verifiability issue. This is something I've discussed before on this blog, and will probably continue doing so for some time; it's a pet topic of mine. Even a cursory reading of what I have to say over my time writing here should make it clear that I fall clearly in the "digital resources are useful and often reliable" camp - and, more to the point, that I despise the casual elitism which says one must need a tangible degree to even attempt to contribute to knowledge on issues. Despite that, I have my own problems with content on the Net and try to turn a critical eye onto it. Besides, the reliability issue isn't limited to history online. Academic history isn't infallible, sometimes having a real problem being uncritical about sources (as I found out during my undergraduate thesis, watching Thomas Szasz get invoked as a reliable source about mental illness several times). Public history has its own problems, as evidenced by controversial manipulation of exhibits at the War Museum or Smithsonian, or the mendacious silliness that is documentaries like The Valour and the Horror. There are differences of degree, but we do need to take the beam out of our own eye on that account, no?

While I have a thorough appreciation for the attitudes of openness and general egalitarianism which were fundamental parts of digital culture since well before I started seeing it in the early nineties, I think they can be taken too far or too blindly at times. As an example, I'm part of that rather small subset of big-on-digital-stuff historians who don't like Wikipedia, or more to the point, the predominant intellectual culture on it. Jaron Lanier echoes a good chunk of my views in his essay on Edge (another site on my "everyone should go here sometimes" list), though he takes his objections a little further than I would by invoking terms like "digital Maoism." On a more scholarly and financial level, there's some debate over the issue of open-access content for traditional scholarship, with arguments ranging from the silly ("socialized science!") to more

These sorts of issues are going to be hovering around academia for some time. The "problem" is that they will do so regardless of whether academia goes down the path of open access or Wikiality or whatever else. Many institutions are cheerfully doing this kind of thing, with some advocates going so far as to make religious allusions through the use of terms like "liberation technology." Those who don't, however, will still have to respond to it in one way or another, as it becomes more and more obvious that simply saying "don't use online sources" is not an acceptable approach.

The interesting aspect of this shows up when we start to see a fusion of the different cultures I've been talking about lately. We've got that amateur culture, big on do-it-yourselfing and drawn into their various pursuits out of a genuine desire to do something; we've got the loosely meritocratic and not-so-loosely anarchistic elements of digital culture, with its appreciation of openness in design or knowledge and growing use of hugely collaborative projects; we've got the traditional academy, feeling around the edges of the potential of this merger. Of course there's room for uneasiness between the three elements, but at their heart all three are (in theory) built around an appreciation for knowledge and ability.

[1] - Google has since largely revived Usenet with its establishment of Google Groups a few years ago (and the newsgroup links are courtesy of them); a chunk of the "original" Internet is once again out there, active, vibrant and wasting bazillions of man-hours per day. Huzzah!

[2] - This image from Wikipedia's (I know, I know) article on Usenet, which illustrates the meanings of the different "hierarchies" of Usenet newsgroups, illustrates the alt.* hierarchy perfectly in the eyes of anyone who remembers the original thing.

[3] - "Anyone ... [who] knows Gene, Mark, Rick, Mel, Henry, Chuq, and Greg personally" is one popular definition of such people. The Net used to be a small world.

Tuesday, April 15, 2008

For Its Own Sake

In terms of my historical pursuits, and many others besides, I consider myself an amateur and very much hope to stay that way.

This has little to do with hoping to maintain humility in the face of a vast amount of knowledge, or recognition of living in a postmodern world where I'm doomed not to really know anything about anything, or anything else along those lines. (There's an element of truth to each, of course; if there's anything approaching completion of an advanced degree in history has taught me, it's precisely how little I know.) What I'm talking about is a rather older definition of the term.

The word "amateur" doesn't exist merely as an antonym to "professional" (or, more insidiously, "competent"). Being an amateur, ideally, is being an amator: doing or pursuing something out of a love of the subject and a desire to pursue it, with vocational aspects as secondary. This doesn't necessarily require years of formal training in a discipline, though I obviously think that helped in my own case. Sometimes that lack of training can be worked around, often in very surprising ways, by dedicated amateurs, however.

Consider chemistry sets. As we have been Thinking Of The Children rather more than is necessary for the last several years, they have, along with rather too many other things, fallen into disuse and obscurity to the point where it’s somewhere between difficult and impossible to find a “real” one. Depending on the safety level of various science-related hobbies, this is probably sometimes a good thing, but on the whole I think we’re losing something when we eliminate those kinds of incentives for learning. Others seem to agree with me. As a reaction to this sort of thing, many people and, rather likely, no shortage of kids have set about creating their own chemistry sets or ad-hoc equivalents thereof from scratch. However, the supplies needed are themselves difficult to impossible to find for the same reason a lot of the sets are.

This hasn’t prevented Darwin’s pager from being set off, however. Rather than abandon their interests due to the difficulty of getting modern materials, quite a few would-be amateur chemists have gotten together and formed their own communities [1] in which they’ve gone back to older textbooks – which often presuppose far less equipment or financial resources – in order to learn how to create the reagents or gear they need from scratch. This probably upsets the Department of Homeland Security or Public Safety Canada, but on the other hand just about anything does anyway, and I like the existence of environments like these where people can get together to learn about things.

That’s simply a more spectacular example of what happens when a bunch of amateurs get together and decide to pursue their interests than most. Similar things abound on more conventional, less high-entropy levels: ham radio operators, historical reenactors, astronomers and so on have long since provided an avenue for this sort of thing. Thanks to the various services and methods of communication brought about by the Internet, these cultures are changing in several ways. Perhaps the most obvious impact is that groups of amateurs are no longer restrained by geography. While the Hamilton Amateur Astronomers are rather obviously connected to the city of Hamilton, the members of Science Madness are less concerned about being in the same place. This can often be a hook for people who have an interest in one thing or another, but found no avenue or reason to seriously pursue it due to a lack of resources, a lack of knowledge, or a lack of like-minded individuals to create some nice positive feedback loops which encourage further learning or practice. I know I’ve picked up or maintained several interests as a result of encountering such groups, and I’m sure several of those who may be reading this have run into similar sorts of things.

The spread of these amateur cultures has been seen as a mixed blessing, of course. There are areas in which a lack of formal training or instruction really is a problem. Amateur historians or even chemists can often make those of us within the formal discipline twitch, but I’d be much happier dealing with either than I would an amateur trauma surgeon. [2] On a less life-and-death level, dealing with matters involving creativity, activism, history and so on, there are more shades of grey and room for vigorous debate, which often generates more heat than light but winds up illuminating nonetheless. At this point, in any case, there is no shortage of subjects, sites and organizations where anyone - amateur or expert - can dive into something without necessarily short-changing themselves.

A good chunk of my current history-related amateur geekery involved 513 over the last little while, as a component of our major class project where we were mounting a series of interactive exhibits built around the (very) general theme of "the sky." (My group was focused on the space race in general, and Sputnik in particular.) I've been interested in interactivity and visualization as tools to make exhibits more interesting for awhile, and thought I'd try to find some good visualizations and then ways for people to engage with them. The visualization part was a breeze, given the subject: I made use of Celestia, a fantastic - and free! - space simulation program that anyone interested in space needs to download right now. (I mean that. Why are you still here?)

For interacting with it I had planned on doing something a little more esoteric. Working with the SMART Board project for the main public history course got me interested in the idea of interactive whiteboards in general, especially when we got to the point of pushing their capabilities' limits somewhat. I like touchscreens in general, as a way of getting over that boring keyboard-talks-to-the-computer tradition. [3] To that end, I thought I'd try to build an interactive whiteboard in a roundabout way with a combination of a projector hooked up to my desktop and a sensor built from a homebuilt IR light pen and a Wii remote, inspired by Johnny Chung Lee's blog "Procrastineering." The idea was to project the image onto a wall, which could then be manipulated with one (or, preferably, two) pens to rotate, zoom, etc. the final product. I got partway through the process before the suddenly-Schrödingerian status of our having a projector - and the end-of-semester crunch season in general - caused the Wii component of the whole project to end up by the wayside, forcing us to settle on the visualization and some audio I drew together towards the end. The whiteboard shall exist in time - indeed, it must, since it's going to remain a splinter in my mind until I get the thing working - but it has been relegated to a summer project.

The thing is, I wouldn't have come up with that kind of idea on my own, and would have been hard-pressed to get a hold of some of the basic theory to follow through on it, without these kinds of established and tolerated amateur cultures towards various esoteric fields of knowledge existing in the first place. While there are obviously going to be concerns about significant aspects of them - reliability for a page on historical maps, safety issues on amateur science sites specifically devoted to "fires and loud noises," etc. - I do like the variety, openness, and sheer weirdness of a lot of these sorts of resources, and I approve of living in a world where it's fairly easy to take just about any hobby or discipline and find a thriving community of engaged, helpful devotees already involved in it at any level from greenest beginners to world-renowned experts.

This is getting far more tome-ish than I originally planned, so I think I'll be belatedly polite and cut it off here. Next I want to talk about the digital aspects of this sort of thing. Between the Internet's ubiquity, its ability to help spread and coordinate amateurs of all skill levels from around the world, and its own distinct cultures as regards ideas and the transmission thereof, there are some pretty profound implications - and challenges - for historians to consider.

[1] – I was casting about earlier for some URLs connected to this, as I remembered coming across mention of it via a couple of news stories and blog posts a year or so ago. Upon asking a friend who had pointed me at said stories in the first place if he remembered where we'd seen them, he pointed me at this URL, a dedicated domain to the subject, instead. I (foolishly) expressed surprise at that and was informed that I "underestimate the Internet at [my] peril. ;)" I do indeed.

[2] – I’m entitled to one or two shameless straw men per semester, so I don’t feel too guilty about this one.

[3] - I was also inspired on this note by Jeff Han's magnificent presentation at the TED Talks in February 2006, in which he demos an intuitive interface that gets rid of mice and keyboards altogether. I want three.

Sunday, April 13, 2008

Mindsets in the Digital Humanities

It’s easy to get lost in the technical aspects of digital humanities. After all, isn’t the “digital” part of the term fundamental to the condition of the discipline? It would seem, anyway, that that was the core part of this whole area of study. Most of the elements we’ve been studying in the course of this year’s Digital History course at Western have had that technical nature about them. These have varied in complexity from subject to subject, of course; there is little that is really technical in a computing sense about how not to design web pages, but discussions of spam filtering techniques require some knowledge of computing theory to grasp, and their historical connections aren’t immediately relevant. All of these, however – and most of what we’ve discussed in the last year – have in common that substantial “digital” focus. The impression at first glance is that we’re talking about *technology* and history, not technology *and* history or technology and *history*.

It is important, however, to keep the “humanities” half of “digital humanities” in mind. The technologies we use these days, either for historical research and presentation or for any number of other uses, are – for now, mostly – designed and used by people. As we know as historians, people who aren’t mathematicians are likely to bring quite a lot of outside baggage into their work. The tools, theories and outputs used in the digital humanities – and anything else, for that matter – are going to reflect certain cultures, backgrounds and key assumptions inherent to those who produce them. In my previous post, I discussed Bonnett’s use of the term “hieroglyph” to refer to interfaces or tools which are too difficult for layfolk to readily understand. This can also be applied to the mindsets of people who produce those tools, which can either provide another layer of obfuscation or simply be the source of the initial problems. This can produce some elements of culture shock on top of the learning curves involved in using new technologies.

As an example: in class about a month ago, we were talking about locative technologies and ubiquitous computing. In the middle of the readings for that day was an article by Bruce Sterling on “blobjects” which started something of a stir in the discussion. A lot of this seemed to be about Sterling’s writing style in his article. Fair enough: technophile and spec-fic geek though I am, even I found it hyperbolic, annoying and laden with for-its-own-sake jargon. But there was some substantial context behind the words being written in that article. Sterling is a science fiction writer; not only that, but one of the writers whose work helped define and establish one of the most computer-centric fields of science fiction, cyberpunk, as a thriving genre. He was speaking at SIGGRAPH, a prestigious conference on computer graphics and research on same. There is going to be a different set of approaches, of expectations, of worldviews in a group of people who are likely to non-ironically talk about The Future (with Emphatic Capitalization, of course) than there would be for those who tend to get published in the Journal of Hellenic Studies. (There’s also going to be certain expectations from the audience on the author. I’m certain Sterling delivered on that front, but I also wasn’t the audience so I can’t be sure.)

So where am I going with all of this?

The plan here is to get a series of four or so posts up in the next few days, where I'll try to look at some of the approaches and mindsets out there which strongly influence different aspects of digital humanities (while also trying to draw together a bunch of material from my time in this course and program). I’m convinced a combination of parts of the digital cultures which developed in academia and migrated onto the net, and the rebirth of a broader amateur culture from the last few years, provide a lot of the foundation beneath digital humanities in general, and are only going to influence them more as they become more popular over the next several years. People don't, of course, need to be fully involved in, or even that aware of, what's going on behind the scenes of the tools they use in their day-to-day lives or projects in order to use said tools. It helps, however, especially when it comes to encountering concepts which are relatively new or strange, such as a growing emphasis of technology in the presentation of history and other humanities.

Friday, March 14, 2008

Omnia Mihi Lingua Graeca Sunt

Yesterday, in a fairly crowded Weldon, I found myself in the nice and ironic position of being stymied by the self-checkout system to the point where I gave up and resigned myself to being stuck in front of a line of people, each of whom was checking out an entire floor of the place. The fact that the books I was trying to check out were a Marshall McLuhan book and another book on using technology to facilitate learning, of course, helped the inherent awesomeness of the whole situation.

After I got bored of looking silly and checked the things out in the manner of the previous century, I got to thinking about the learning processes for various different things in general, and technology in particular. It’s been bouncing through my head a lot this semester, which is probably a good thing when one of my textbooks is on interaction design. It has come up quite a bit lately, between my making heavy use of Blender for various personal and school projects, figuring out the foibles of the SMART Board software for another project, and considering whether I can pick up Python as well. Only one of the three really strikes me as especially esoteric at this point, though the two I’ve got some experience messing with these days have each routinely set me off on some proper rants.

I’ve been reading Raymond Siemens’ and David Moorman’s Mind Technologies: Humanities Computing and the Canadian Academic Community for the last few days. It’s more or less what the title implies – a survey and discussion of the state of technology use in academia in the country, in the form of a series of essays or chapters by various scholars in different fields, discussing how they’re applying technologies to their various projects. One of the chapters was by John Bonnett, whose work I’ve written about here before. The chapter mainly discussed his Virtual Buildings Project, although the secondary focus of it was on, as he puts it, “how hieroglyphs get in the way.” Bonnett defines “hieroglyphs” in his chapter not as the pictographs used by Egyptians, Mayans, Mi’kmaq and so on, but rather as any sort of system which is unnecessarily complex or abstruse. When we encounter something we just can’t figure out, either due to a lack of knowledge on our part or a lack of effective design on the part of what we’re trying to use, it might as well be in another language.

Part of learning these other “languages” is, to be fair, our own burden to deal with. There are very few things out there which one can’t figure out, but quite a few that they won’t figure out, due to frustration, a lack of time or energy, and so on. And the more “languages” one knows, the more they can pick up as time goes by. With too little background, just about anything can come across as Linear A, frustratingly indecipherable despite our wishes or efforts.

But that doesn’t excuse opaque interfaces or user-hostile design in the process of developing whatever you’re working on, be it a scanner, a piece of graphics software, a book, or a simple tool. Back in December, Carrie asked, “why can there not be more formulated programs for the not-so-computer-savvy historian?” (emphasis added.) It’s a good question, one which should be asked more often and more forcefully than is done these days. There’s a lot of incredibly powerful tools for any number of tasks just sitting out there – including the tools to, if need be, make additional ones – that are seeing very little use either because they’re hard to find or they’re difficult for the uninitiated to get their heads around. As I said above, part of the responsibility to deal with that falls on those of us who want to use these things: a certain minimum of effort is necessary to learn how to do anything, of course. At the same time, not everyone is going to have a background in computer science or the like, especially if they’re in the humanities.

Rather than use this lack of background as an excuse to avoid the entire field, we ought to be putting some effort into finding what’s out there that can be readily used and making it known to others. We could be finding tools that are out there and suffer from these learning curve problems, and trying to figure out how they can be improved through better interfaces, documentation, and so on. And probably most ambitiously – but most rewardingly – we could see what we can do about making, or at least planning or calling out for, some of those tools ourselves, as we (usually) know what we want in such things. Each of these is necessary for those of us wanting to incorporate new tools into our work as historians (whether public or academic), and aspects of each are at least feasible for any of us.

Wednesday, March 12, 2008

On Haystacks

“Oh, they just look things up online now,” people often say of students (or maybe just undergraduates) these days. As loaded statements go, it’s a pretty good one. The implications are manifold. It implies that Kids These Days lack the work ethic of us Real Scholars - people who, of course, never had to scramble to fill the necessary sources on a paper or started anything at the last minute. It implies that this will hasten the Imminent Death of the Library, or that it necessarily requires a decline in the quality of the students’ scholarship. The idea that electronic sources are intrinsically bad or unreliable notwithstanding – a concept, it should be obvious by now, which I reject out of hand – there’s another implication about the above quote which matters rather more to me. The fact that people are willing to look beyond the world of monographs and journal articles, provided they don’t leave those behind entirely, is a good thing in my opinion. What does worry me is not the fact that students will “just look things up online” as much as the question of whether they can.

To say there’s a lot of material out there is something of an understatement on the order of saying “setting yourself on fire is often counterproductive.” A lot of this material is nothing short of fantastic for casual, professional or academic reference, and really ought to be more obvious or widely-used than it is, either for the purposes of casual browsing or for actual study. (This isn't even taking amateur culture into account, something I want to address in a future post.)

The difficulty arises from actually finding the stuff. A given resource, once found, may be laid out in an intuitive, accessible manner (or not), but getting there in the first place can often prove difficult. The Net as a whole is anarchic, indexed mainly in an ad-hoc manner when it is indexed at all, with the indices themselves usually hidden under the hoods of search engines (Google Directory and similar sites notwithstanding). The visible indices that do actually exist are often obscure, arcane or both. Much like another arcane system of managing information many of us use as scholars often without a second thought, these systems can be adjusted to and eventually mastered.

In searching for materials online, there’s usually a lot more involved than simply plugging a word or three into Google. This can often work – searching for Dalhousie University is not likely to be a challenge, and the first page of searches for nearly anything is going to bring up a Wikipedia article or two (although that does bother me, and I'll rant on it later). On the other hand, ambiguity or obscurity can cause otherwise simple searches to become annoyances – the first five hits when searching for Saint Mary’s University point to five separate universities. (Of course, in my entirely unbiased opinion, the One True SMU is the first hit.) Context makes it obvious which is which in cases like these, but that is not always going to be the case.

As part of a project I’m working on – actually, a piece I intended to write here but which has spiraled out of proportion like some kind of even-more-nightmarish katamari – I’ve got a small pile of books sitting on a shelf at home (or in my spine-ending backpack here). One of them is Tara Calishain and Rael Dornfest’s Google Hacks, part of the vast horde of O’Reilly reference books. A lot of it is more or less what it sounds like – a series of ways to game Google’s search engine and its other applications to do various useful, entertaining, or malign things – but the main thing which interested me about it is the fact that it’s a 330-page book on the detailed use of an online tool which is so utterly ubiquitous at this point as to either be invisible or supposedly simple. Recognizing even a handful of the points raised by the book turns a “simple” search engine into a fairly complex and powerful tool whose capabilities aren’t recognized by the majority of people who use it on a day-to-day basis. Now, I believe that just about anything can be used in a variety of different, useful and creative ways, but as aware as I thought I was about what you could do with Google, quite a few things in this book managed to surprise me. It leaves me wondering what else is out there, either useful for its own sake or for direct application into my own fields of interest.

I don’t think the problem is the fact that people are simply looking things up online at all, but I do think a lot of them are probably doing so poorly, and lack the basic knowledge to fix that. Tempting as the “I feel lucky” button may be, a search for anything moderately obscure or ambiguous is going to have little success or yield problematic results unless the searcher knows how the game is supposed to work. Having an idea of what you’re looking for is by far the most important aspect of looking for anything, whether online or off. If that condition is satisfied, however, the next step of knowing how to find information can often be a challenge as well. People might scoff, but using something like Google is a skill, and not simply a form with a button attached, and I think it should be taken less for granted than it currently is. Despite the intimidating nature of books like Google Hacks, the basic gist of how to use it, or other forms of computer-assisted searching in general, is something that can be taught or learned readily enough. A little bit of getting one's head under the hood of how these engines operate, and a little bit of understanding about how the system they are meant to navigate works, can go a huge way.

I don’t see it as the Internet’s fault whenever someone uses it to receive shoddy information, any more than I believe a library is at fault when someone checks out a book by Erich von Daniken or Anatoly Fomenko and uncritically takes them at face value. Rather than dismiss the utility of using these kinds of resources at all – something which I consider futile at the very best – we really need to pay more attention to them, learn how they work (for Google at least, this is far easier than many may think), and teach others how to manage and interpret the results they find.

Thursday, December 13, 2007

eNemy At The Gates

- "It's an online source. So what? They're just as good as conventional ones."

- "Online sources are intrinsically bad and should never ever be used."

There are usually a few, pretty predictable arguments presented when people argue for an automatic rejection or disdain of online sources. I'm going to address the most common ones I've run into, in order from most to least absurd. (I'm not going into arguments which go so far as to dismiss government or university sources for being online; that, I hope, is too self-evidently ridiculous to warrant refutation.)

Objection the First: "It's too difficult to track down references. You can't cite Web pages as specifically as you can books or other materials: there's no page numbers!" The crux of this argument is that online sources are not print sources - duh - and therefore are too difficult/unreliable/etc to source to bother citing, because of inconsistent layout and the fact that it may not be immediately obvious where one may get all the information needed to do a proper, full citation. A number of simple solutions exist here. If all the information isn't there, then that's fine; it's not your fault if the specific author or organization behind a Web site isn't explicit enough, for instance, provided most of the information (and the location itself) are there. As far as citing specific parts of a site, of course Web sites aren't going to have page numbers. They aren't books. I don't see a problem here. On the other hand, most sites out there - and all more static media like PDFs - are organized into small enough chunks that you can usually narrow down a cite to a moderately specific page. (They're also often equipped with anchors, which are great in properly-designed sites.) If one can't due to a large block of text, browsers come equipped with search functions for a reason, at least if one's simply concerned with confirming that the information's there.

There is a real problem with some aspects of online sources. "Deep Web" materials - material which is usually procedurally generated, only accessible in its specific form through cookies, searches or other forms of interaction, and so on - are considerably more difficult to get a hold of. As if that wasn't bad enough, they're growing: the Deep Web is hundreds of times larger than the "surface" one right now. There will have to be mechanisms to deal with this in time; handling them on a case-by-case basis is a bare minimum, however. I'm not convinced of the desirability of rejecting an entire field because of some slight inconvenience.

Objection the Second: "Just anyone can put up a Web page!" Oh ho! Yes, this is true - and so what? If you believe it's difficult to have a particularly absurd piece of work show up in book form - or, in the right circumstances, appear as a published article in an academic journal - then I have a bridge I'd like you to consider buying. This argument doesn't impress me at all, mainly because its main underlying assumptions - that "just anyone" can put something online, and that "real" repositories can effectively prevent people from getting their crackpottery in among them - are both flatly untrue.

Another implication of this claim bothers me considerably more. It is the claim, sometimes explicit but usually not, that the identity of a person making an argument has some bearing on the quality of the argument itself - or, indeed, is more important than said argument. This is a contemptible idea, built around a set of logical fallacies that all but the most sophistic freshmen are usually aware of. If we are talking about a world of debate and scholarship - and even amateurs can engage in either! - then these arguments should rise or fall on their own merits. An historical stance should be effective regardless of its creator, provided it stands up to scrutiny - but using its creator's identity as the sole point of that scrutiny is not an appropriate way to handle such things. The identity of a person can influence an argument to a point - after all, consistently good (or bad) arguments can imply more of either in the future - but in the end the effectiveness of a stance should be determined by, well, its effectiveness, and not its creator.

With that in mind, I also think it's fantastic that it's easier for people to put information up for all the world (or at least a specific subset of it) to see. The amount of lousy history - and economics, and science, and art, and recipes - will go way up as a result, but there's room for the good stuff as well. We shouldn't ignore the latter because of the presence of the former, any more than we should shun good archaeologists because von Daniken ostensibly published in the field. We're dealing with a medium here which allows people to do end runs around the gatekeepers for various fields. So what if things get somewhat nuts and over-varied as a result? Personally, I want to embrace the chaos.

Objection the Third: "Online material isn't peer-reviewed and therefore shouldn't be used." While this is often used synonymously with #2, above, it is a distinct complaint, and the only one of these three which I don't see as entirely without merit. While the first two complaints are ones of mere style or elitism, this is an issue of quality control. While the lack of (obvious) peer review - detailed criticism and corroboration by a handful of experts in a specific field - is indeed a problem, it is one which provides some good opportunities for readers, both lay and professional, to hone some abilities.

A huge component of the discipline of history, on the research side of things, is the notion of critical examination of sources. Note that this is not the same as merely rejecting them! We are taught to look with a careful, hopefully not too jaundiced, eye at any source or argument with which we are presented, keeping an eye out for both weaknesses and strengths. The things to which historians have applied this have diversified dramatically in the last several generations, moving out of libraries and national archives and accepting - sometimes grudgingly, sometimes not - everything from oral traditions to modern science to (as in public history) popular opinions and beliefs about the issues of the day or the past. It's a good skill, and probably a decent chunk of why people with history degrees tend to wind up just about everywhere despite the expected "learn history to teach history" cliche (which, of course, I plan to pursue, but hey!). Online sources shouldn't get a free pass from this - but they should not get the automatic fail so many seem to desire either.

To one point or another, we are all equipped with what Carl Sagan referred to in The Demon-Haunted World - find and read this - as baloney detection kits - a basic awareness of what may or may not be problematic, reliable, true or false about anything we run into in day-to-day affairs. There's semi-formal versions of it for different things, but to one level or another even the most credulous of us have thought processes along these lines. It's a kit which needs to be tuned and applied towards historical sources online - just like all other sources - and in a far more mature way than the rather kneejerk pseudoskepticism which is common these days.

(I compiled a sample BDK for evaluating online resources a couple of years ago as part of my TAing duties at SMU; once I'm back home for the holidays I intend to try to dig that up, and I'll follow up on this post by sticking it here.)

The reflexive dismissal of sources of information based entirely on their media is not just an unfortunate practice. It involves a certain abdication of thought, of the responsibility to at least attempt to see some possibility in any source out there, even if it doesn't share the basic shape and style of academic standards. Besides, as I mentioned earlier, there are opportunities in this as well. The nature of online sources isn't simply the "problem" that someone else didn't do our work for us, pre-screening them for our consumption ahead of time. Their nature is such that it underscores the fact that we need to be taking a more active role in this anyway. For the basic materials out there, it's far easier to vet for basic sanity than many might think - I did effectively show a room full of non-majors how to do it for historical sources in an hour, anyway - and giving everyone a little more practice in this sort of thing can't exactly hurt. In other words, we need to approach online sources with a genuine skepticism.

But guess what? This whole thing's just a smokescreen for a larger issue anyway. We're willing, indeed eager, to hold varying degrees of skepticism towards online sources, but why are we singling them out? Why the complacency as regards citations of interviews, of magazine articles, of books? If you're going to go swinging the questioning mallet, you should at least do so evenly, don't you think?

And on that note, I head off to be shoehorned into a thin metal tube and hurled hundreds of kilometers. I shall post at you next from Halifax!

Tuesday, December 4, 2007

Silently Posturing

Cooper's categories are described in terms of "postures," essentially their dominant "style" or gross characteristics which determine how users approach, use, and react to them. The first of these four postures is the "sovereign" posture: sovereign programs are paramount to the user, filling most or all of the screen's real estate and functioning as the core of a given project.

The second is "transient," and is the opposite of sovereign software both visually and in terms of interfaces. Intended for specific purposes, meant to be up only temporarily (or, if up for a long time, not constantly interacted with), transient programs can get away with being more exuberant and less intuitive than sovereign applications.

I had to rethink my gut reaction of describing half the annoying stuff I use as daemonic when I realized that the third posture refers to daemon in the computing sense of the word rather than the more traditional gaggle of evil critters with cool names. (Computing jargon tends to come from the oddest places.) Daemonic postures are subtle ones, running constantly in the background but not necessarily being visible to the user at any given time. Daemonic programs tend to either have no interface (for all practical purposes) or tend to have very minimal ones, as the user tends not to do much with them, if anything. They're usually invisible, like printer drivers or the two dozen or so processes a typical computer has running at any time.

The final set of programs are called "parasitic" ones, in the sense that they tend to park on top of another program to fulfill a given function. Cooper describes them as a mixture of sovereign and transient in that they tend to be around all the time, but running in the background, supplementary to a sovereign program. Clocks, resource meters, and so on, generally qualify.

In the interest of this not being entirely a CS post, I should probably answer the initial request on the syllabus as to how it can affect my historical research process. I'm not sure, fully, but I'm also answering this entirely on the fly and more concerned with how it should affect my process. At present, I'm not using many programs specifically for research purposes. Firefox and OpenOffice (which I use in lieu of Microsoft Office, moreso since that hideous new interface in Office '07 began to give me soul cancer), the main programs I tend to have up at any given time and which I obviously do a lot of my work in, are definitely sovereign programs, taking up most of my screen's real estate. The closest thing I have to a work-related application that's transient is Winamp, which is usually parked in the semi-background cheerfully producing background noise I need to function properly. I don't make much use of parasitic programs due to a lack of knowledge of the options about them, mainly, and of course my daemonic ones are usually invisible.

The chunks of this I make use of are mostly a case of "if it ain't broke, don't fix it." I've got my browser, through which I access a lot of my research tools (including Zotero, the most obvious parasitic application I have, and the aggregator functions of Bloglines, the, uh, other most obvious parasitic application I have); I've got my word processor, through which I process my words; I've got Photoshop for 2D graphics work and hogging system resources; I've got Blender for 3D graphics stuff (much though I am annoyed by its coder-designed interface); I've got FreeMind, which is great for planning stuff out. I've no shortage of big, screen-eating sovereign applications, in other words, most of which do their often highly varied jobs quite well.

Some of these can wander from one form to another, of course. I spent an hour earlier this evening working with Blender's animation function to produce a short CG video. When I started the program rendering the six hundred frames of that video, I wasn't going to be doing anything else with it for awhile, and was thus able to simply shunt it out of the way. That left me with a small window showing the rendering process in one corner of my screen, allowing me to work in some other stuff, albeit slightly more slowly as the computer chundered away. Cast down from the throne, the sovereign program became transitorily transient.

What I'm wondering about now, though, are applications which fill the other two postures; stuff that you can set up and just let fly to assist with research or other purposes. A simple and obvious example of this sort of thing would be applications which can trawl RSS feeds for their user. Some careful use setting the application up in the first place - search, like research, is something which can occasionally take significant skill to get useful results - and you could kick back (or deal with more immediate or physical research and other issues) and allow your application to sift thousands of other documents for things you're interested in. Things like this are not without their flaws - unless you're a wizard with searches or otherwise incredibly fortunate, you're as likely as not to miss quite a bit of stuff when trawling fifty or five hundred or five thousand feeds. Then again, that's going to happen anyway no matter what you're researching in this day and age, and systems like this would greatly facilitate at least surveying vast bases of information that would otherwise take scores of undergraduate research assistants to get through.

The information is out there; there just need to be some better tools (or better-known tools) to dig through it. Properly done, something like this would need minimal interaction once it gets going; you set it up, tell it to trawl your feeds (or Amazon's new books sections, or H-Net's vast mailing lists, or more specialized databases for one thing or another, etc.), and only need to check back in daily or weekly or whenever your search application beeps or blinks or sets off a road flare, leaving you to spend more of your attention on whatever else may need doing. Going through the results would still involve some old-fashioned manual sifting, as likely as not, but if executed properly you would be far more likely to come up with some interesting results than you would by sifting through a tithe of the information in twice the time.
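
For the curious, here's roughly what the sifting core of such a trawler might look like. This is only a minimal sketch in Python using nothing beyond the standard library, not a description of any existing tool: the function names (fetch_feed, matching_items), the sample feed and the example.org links are all made up for illustration, and it assumes plain RSS 2.0 feeds (Atom feeds name their elements differently and would need their own handling). The "search" here is also just a crude case-insensitive keyword match against each item's title and description.

```python
import xml.etree.ElementTree as ET
from urllib.request import urlopen


def fetch_feed(url, timeout=10):
    """Download the raw XML of one feed (hypothetical helper, not run in the demo)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def matching_items(feed_xml, keywords):
    """Return (title, link) pairs for RSS 2.0 items that mention any keyword."""
    wanted = [k.lower() for k in keywords]
    root = ET.fromstring(feed_xml)
    hits = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        summary = item.findtext("description", default="")
        link = item.findtext("link", default="")
        # Crude match: any keyword appearing anywhere in the title or summary.
        text = (title + " " + summary).lower()
        if any(k in text for k in wanted):
            hits.append((title, link))
    return hits


# A made-up two-item feed so the sketch runs without touching the network.
SAMPLE_FEED = """<rss version="2.0"><channel><title>Hypothetical history feed</title>
<item><title>New work on Halifax shipyards</title>
<link>http://example.org/shipyards</link>
<description>A survey of nineteenth-century shipbuilding.</description></item>
<item><title>Conference notes</title>
<link>http://example.org/notes</link>
<description>Scheduling and room changes.</description></item>
</channel></rss>"""

if __name__ == "__main__":
    for title, link in matching_items(SAMPLE_FEED, ["shipbuilding", "public history"]):
        print(title, "->", link)
```

Pointing something like this at a long list of real feeds on a timer, and having it beep (or set off the road flare) only when the list of hits isn't empty, is the part that would turn it from a toy into the background application I'm describing.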

Something like this could help get data from more out-of-left-field areas, as well; setting up a search aggregator as an historian and siccing it, with the terms of whatever you're interested in, on another field like economics or anthropology or law or botany or physics might be a bit of a crapshoot, but could well also yield some surprising views on your current topic from altogether different perspectives, or bring in new tools or methods that the guys across campus thought of first (and vice versa). That sort of collision is what resulted in classes like this (or, at a broader level, public history in general), of course. I want to see more of that - much more.

It could be interesting to see what kind of mashups would result if people in history and various other fields began taking a more active stance on that sort of thing. Being able to look over other disciplines' shoulders is one of those things that simply can't hurt - especially if we have the tools to do so more easily than we could in the past.

I meant to segue into daemonic applications by talking some about distributed computing research, as much to see if I could find ways to drag history into that particularly awesome and subtle area of knowledge, but as usual my muse has gotten away from me and forced a tome onto your screen. So I do believe I shall keep that for some other time...